
New study offers data-driven approach to identify struggling students early in the term

24 December 2025

A new study published in Computer Applications in Engineering Education by Dr Yiwei Sun from the School of Engineering and Materials Science demonstrates how engineering educators can use statistical analysis of routine assessment data to identify at-risk students and pinpoint which topics need more teaching time.

Three groups of students, three different needs

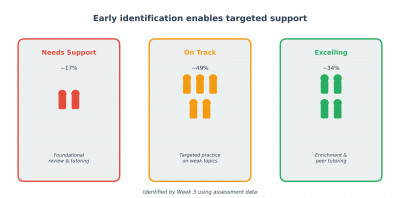

Analysing over 446,000 student responses across 110 mathematical skills, the study reveals that students naturally fall into three distinct performance groups: a low-ability group (around 17%) who need foundational support before tackling complex problems, a middle group (around 49%) who benefit from targeted practice on specific weak topics, and a high-ability group (around 34%) who need enrichment activities rather than repetition.

"What's striking is that we can identify which group a student belongs to by week 3 of the term," said Dr Sun. "Students scoring below 30% on foundational topics in early assessments likely need immediate intervention—before they fall irreversibly behind."

A practical tool for engineering educators

Unlike black-box machine learning methods that produce opaque predictions, this hierarchical Bayesian approach gives instructors results they can directly understand and act upon. The analysis shows not just that a student is struggling, but why: whether the cause is a specific mathematical prerequisite or a particular topic that is proving unexpectedly difficult.

The study found that mathematical prerequisite skills such as "Percent Discount" and "Quadratic Formula" proved more challenging than basic geometry topics, suggesting that students struggle more with mathematical foundations than with the engineering principles themselves.

How colleagues can apply this to their modules

The approach requires only standard assessment data—correct or incorrect responses mapped to topics or skills—making it applicable to any module with problem sets, quizzes, or online homework systems.
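To make the data requirement concrete, here is a minimal sketch of what such a response log might look like and how per-skill difficulty could be estimated from it. It is not the study's hierarchical Bayesian model: it uses a much simpler Beta-prior smoothing of each skill's success rate as a stand-in. The record layout, the toy data, the skill name "Area of Rectangle", and the function `skill_success_rates` are all assumptions made for illustration.

```python
from collections import defaultdict

# Hypothetical response log: (student_id, skill, correct), with correct as 0 or 1.
# The rows are toy data, not results from the study.
responses = [
    ("s1", "Quadratic Formula", 0),
    ("s1", "Percent Discount", 1),
    ("s2", "Quadratic Formula", 0),
    ("s2", "Percent Discount", 0),
    ("s3", "Quadratic Formula", 1),
    ("s3", "Area of Rectangle", 1),
]


def skill_success_rates(records, prior_correct=1.0, prior_incorrect=1.0):
    """Return a smoothed success rate per skill.

    Each rate is the posterior mean of a Beta(prior_correct, prior_incorrect)
    prior updated with the observed counts, so skills with few attempts are
    pulled towards the prior mean instead of showing extreme values.
    """
    correct = defaultdict(int)
    attempts = defaultdict(int)
    for _student, skill, is_correct in records:
        attempts[skill] += 1
        correct[skill] += is_correct
    return {
        skill: (correct[skill] + prior_correct)
        / (attempts[skill] + prior_correct + prior_incorrect)
        for skill in attempts
    }


rates = skill_success_rates(responses)
# Skills ordered from hardest (lowest smoothed success rate) to easiest.
hardest_first = sorted(rates, key=rates.get)
```

With real module data, the same three-column layout could be exported from most quiz or online homework systems, and the ordering in `hardest_first` gives a rough first look at which topics may deserve more teaching time.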

Dr Sun suggests three immediate applications for colleagues:

- Early warning system: Use cumulative performance data from the first few weeks to flag students who may need additional support

- Curriculum rebalancing: Identify which topics students find unexpectedly difficult and allocate more teaching time accordingly

- Smarter practice design: For struggling students, replace volume-based homework with worked examples and scaffolded problems
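The early warning idea can be sketched as a simple threshold rule, assuming the 30% figure and the week 3 window quoted earlier in the article. This is an illustrative simplification, not Dr Sun's scripts: the record layout, the choice of which skills count as foundational, the `min_attempts` guard, and the function name `flag_at_risk` are all hypothetical.

```python
from collections import defaultdict

THRESHOLD = 0.30   # the "below 30%" figure quoted in the article
EARLY_WEEKS = 3    # the "week 3" window quoted in the article

# Assumed tagging of foundational skills; a real module would define its own.
FOUNDATIONAL = {"Percent Discount", "Quadratic Formula"}

# Hypothetical records: (student_id, week, skill, correct). Toy data only.
records = [
    ("s1", 1, "Percent Discount", 0),
    ("s1", 2, "Percent Discount", 0),
    ("s1", 2, "Quadratic Formula", 1),
    ("s1", 3, "Quadratic Formula", 0),
    ("s1", 5, "Quadratic Formula", 1),  # outside the early window, ignored
    ("s2", 1, "Percent Discount", 1),
    ("s2", 2, "Quadratic Formula", 1),
    ("s2", 3, "Quadratic Formula", 0),
    ("s2", 3, "Percent Discount", 1),
    ("s3", 1, "Percent Discount", 0),
]


def flag_at_risk(records, foundational=FOUNDATIONAL, max_week=EARLY_WEEKS,
                 threshold=THRESHOLD, min_attempts=3):
    """Return sorted IDs of students whose early foundational score is below threshold.

    Students with fewer than min_attempts early foundational responses are
    not flagged, because a rate based on one or two answers is unreliable.
    """
    correct = defaultdict(int)
    attempts = defaultdict(int)
    for student, week, skill, is_correct in records:
        if week <= max_week and skill in foundational:
            attempts[student] += 1
            correct[student] += is_correct
    return sorted(
        student
        for student in attempts
        if attempts[student] >= min_attempts
        and correct[student] / attempts[student] < threshold
    )


flagged = flag_at_risk(records)
```

Here `s1` scores 1 out of 4 on early foundational items and is flagged, while `s3` has only one early response and is left out until more data arrives. A flag like this is a prompt for a conversation with the student, not a verdict.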

"The analysis can be run periodically throughout a semester," Dr Sun added. "I'm happy to share the Python scripts with colleagues who want to try this approach with their own module data."

| Contact: | Dr Yiwei Sun |
| Email: | yiwei.sun@qmul.ac.uk |
| People: | Yiwei SUN |